Version 1.6 dated 1 December 2025

Introduction to Automation and System Integration in Information Security

To our surprise, in decades of work in information security we have not come across an overview of cybersecurity software and hardware solutions, their classification, interrelationships, and evolution that is comprehensive and consistent yet concise. We therefore decided to create such an overview, primarily for our own internal use.

Having traveled the paths of auditors, managers, developers, consultants, and entrepreneurs in enterprise security, we have become convinced that the basics of security automation and systems integration are essential not only for strategic management or security architecture development, but also for security assessment, risk management, data and application protection, operational security, and many other branches of modern cybersecurity. We intend to continually maintain, revise, and supplement this overview with your help.

This approach is consistent with our philosophy, as we at H-X Technologies are committed to not only providing you with effective services and unique solutions in information security, but also to sharing both our experience and the latest advances in the field.

Challenges of Automating Cybersecurity

Many details in the vast and multifaceted world of information security are key to ensuring the reliable protection of information and the systems that process it. It is a difficult task to take into account all these details and not miss anything. By the way, for the sake of simplicity, here we use the terms “information security” and “cybersecurity” as synonyms, although they are slightly different.

There is no universal solution to all information security problems, as security is not a state, but a process. Above all, it is constant painstaking manual work and adherence to rules. When technologies are properly combined, they greatly facilitate many security tasks, from security assessment to response to security incidents.

A separate problem in modern information security is the abundance of obscure acronyms. It is quite difficult to memorize them and figure out which ones are protocols, algorithms, frameworks, standards, methods, or technologies, and which ones are types and classes of hardware and software solutions or trademarks.

Finally, it is not always clear how modern and relevant a particular security technology is, what place it occupies in the security industry, how it relates to other technologies, and what its advantages and disadvantages are. Most importantly, it is often unclear how fundamental and promising each technology is, and whether it is worth investing effort, money, time, and other resources in its development and implementation.

Our solutions

Here is a review of software and hardware solutions for information security. It gives you an overview of 131 classes of cybersecurity solutions and technologies, grouped into 15 categories. This categorization covers a wide range of tools and techniques, from data protection and cryptographic solutions, to event correlation systems and risk management.

For simplicity, in this work we use the concepts of methods, methodologies, and technologies in information security as synonyms. Likewise, we use means, tools, systems, and solutions as synonyms for more specific implementations of these technologies and methods.

While honoring the role of scientific research in technological progress, we also believe in the driving force of the market. In the development of technology, people often “vote with their wallets” for certain technologies. While the marketing activities of solution providers set the tone for shaping the direction of technology development, the final decision on how it will evolve is made by its users, who invest their time, attention and money in one solution or another.

Therefore, given that there are quite a few different methods, tools and technologies in cybersecurity, we focus primarily on popular classes of enterprise software and hardware solutions, down to products and services, and secondarily on the technologies underlying these solutions. Some of these solutions and technologies are multipurpose and are used not only in security, but also in related areas, for example in configuration or performance management, as well as in other IT and business processes.

This work may be one of your entry points into the world of information security. Hopefully, it will serve you as a textbook, reference, or even a desktop guide, especially if you are actively involved in cybersecurity solutions or technologies.

In either case, our work will help you not only to get an overview of automation in information security, but also to come closer to understanding which services or tools might be suitable for protecting a particular digital environment.

Thus, the main goal of this work is to methodically classify popular hardware and software solutions in the field of information security, to show their interrelationships and development, to explain the common abbreviations of their names (acronyms), and to consider how these solutions help solve current information security problems, mainly in corporate security.

A Race of Threats and Defenses

What exactly do information security solutions protect against? For a consistent and in-depth understanding of how information security solutions have developed, it makes sense to first look back at how information security threats have evolved.

Since computers and computer networks emerged in the mid-twentieth century, technology-related offenses and threats to the confidentiality, integrity and availability of information have taken on qualitatively new forms and reached new levels with each decade:

- 1950s: Large-scale information security (IS) incidents were rare, as computer technology was just beginning to evolve. The main focus was on physical security and protecting information from Cold War spying.

- 1960s: As computer technology and network communications advanced, the first cases of unauthorized access to computer systems emerged. Hackers began to explore ways to penetrate systems and networks, although these actions were often motivated more by curiosity than malice. The concept of “information security incident” did not yet have a modern meaning.

- 1970s: Appearance of the first prototypes of computer viruses and antiviruses – self-propagating programs Creeper and Reaper. Development of telephone fraud. For example, one of the world’s first hackers, John Draper (known as Cap’n Crunch), used a cereal box whistle to imitate the tones of the AT&T telephone network to gain free access to long-distance calls.

- 1980s: Computer viruses and hacking developed actively. Cyberattacks began to be used in political and ideological conflicts. According to one account, in 1982 the U.S. planted a logic bomb in pipeline control software that the Soviet Union had covertly acquired, causing a 3-kiloton explosion and a fire in Siberia. In the late 1980s, German hacker Markus Hess, recruited by the KGB (the Committee for State Security of the Soviet Union), broke into computers at U.S. universities and military bases, highlighting the vulnerability of critical systems. In 1988, Robert Morris released one of the first computer worms to spread across the Internet, which paralyzed about 10% of the computers connected to the network. Morris became the first person convicted under the U.S. Computer Fraud and Abuse Act.

- 1990s: Cybercrime and cyber espionage grew. The first phishing and DDoS attacks appeared. With the expansion of the Internet and the emergence of new services such as e-mail, social networking and online gaming, criminals gained more opportunities to extract profit or information from these resources. In 1994, Vladimir Levin (known as Hacker 007) stole about $10 million from Citibank by gaining remote access to its network. In 1998, two California teenagers carried out a major attack on the Pentagon called Solar Sunrise.

- 2000s: The methods of cybercriminals continued to evolve rapidly. Botnets emerged: networks of compromised computers that hackers use to steal information, send spam, launch DDoS attacks, disguise intrusions, and for other purposes. The 2007 cyberattack on Estonia was a major example of a politically motivated cyberattack. With the development of social media and mobile technologies, a new form of expressing opinion or discontent emerged: cyber activism and cyber protests. Some groups and individuals began to use cyberattacks as a means of gaining attention or demonstrating their position. In 2008, the hacktivist group Anonymous launched Operation Chanology against the Church of Scientology using DDoS attacks, website hacks, and other methods.

- 2010s: First thefts of cryptocurrencies, threats to the Internet of Things (IoT), and supply chain attacks. Crypto miners have emerged, utilizing computer resources to mine cryptocurrencies. Cyber blackmail based on ransomware that encrypts user data and demands a ransom for its recovery has rapidly developed. In 2017, the global WannaCry ransomware attack infected more than 200,000 computers in 150 countries, demanding users pay between $300 and $600 in bitcoins to unlock their files.

- 2020s: Phishing and other social engineering methods have evolved and begun to use artificial intelligence, for example deepfakes: realistic fake audio and video used for misinformation or fraud. Attacks on remote employees have become widespread since the coronavirus pandemic. States’ cyber espionage and cyberwarfare activities have increased. Supply chain attacks continued to evolve. The 2020 attack on SolarWinds, a provider of network and IT management solutions, turned out to be a large-scale cyber espionage operation affecting many government agencies and private companies in the United States and other countries.

Today, almost every organization has faced a cyber problem. About 83% of organizations discover data breaches in the course of their operations. This underscores the critical need to develop and implement reliable information protection solutions, including hardware and software solutions. These solutions are evolving at practically the same pace as the threats: the growth rate of damage to the global economy from information security incidents is comparable to the growth rate of the information security solutions market.

While information security threats are becoming more complex, the functions of hardware and software solutions are also actively evolving. They protect against threats not only at the moment of an attack, but also well before and after it. Security technologies address the earliest prerequisites and conditions for vulnerabilities, leverage advanced technologies such as cloud, blockchain and neural networks, improve system visibility and analytics, and offer increasingly sophisticated risk mitigation and compensation.

Thus, understanding the basics and trends of information security automation becomes a necessity not only for IT and cybersecurity professionals, but also for organizational leaders and even for a wide range of users seeking to secure their data in the digital age, including security assessment of their data, computers, smartphones, applications, as well as the security quality of IT service providers and products.

Groups of hardware and software solutions

The way we have adopted to structure sections and classes of solutions is not a dogma. For example, some solutions may be assigned to several groups at once, while others may require separate sections. Our method of structuring is based on considerations of ease of learning. We adhere to the principles of Occam’s Razor and “simple to complex” and the assumption that the simpler and earlier the technologies and tools are, the more familiar they are to the reader, and the easier it is for the reader to build up a picture in their mind.

The structure of the review:

- Cryptographic solutions: EPM, ES, FDE, HSM, KMS, Password Management, PKI, SSE, TPM, TRSM, ZKP.

- Data Security: DAG, Data Classification, Data Masking, DB Security, DCAP, DLP, DRM, IRM, Tokenization.

- Threat Detection: CCTV, DPI, FIM, HIDS, IDS, IIDS, NBA, NBAD, NIDS, NTA.

- Threat Prevention: ATP, AV, HIPS, IPS, NGAM, NGAV, NGIPS, NIPS.

- Corrective and compensatory solutions: Backup and Restore, DRP, Forensic Tools, FT, HA, IRP, Patch Management Systems.

- Deception Solutions: Deception Technology, Entrapment and Decoy Objects, Obfuscation, Steganography, Tarpits.

- Identity and Access Management: IDaaS, IDM, IGA, MFA, PAM, PIM, PIV, SSO, WAM.

- Network access security: DA, NAC, SASE, SDP, VPN, ZTA, ZTE, ZTNA.

- Network security: ALF, CDN, DNS Firewall, FW, NGFW, SIF, SWG, UTM, WAAP, WAF.

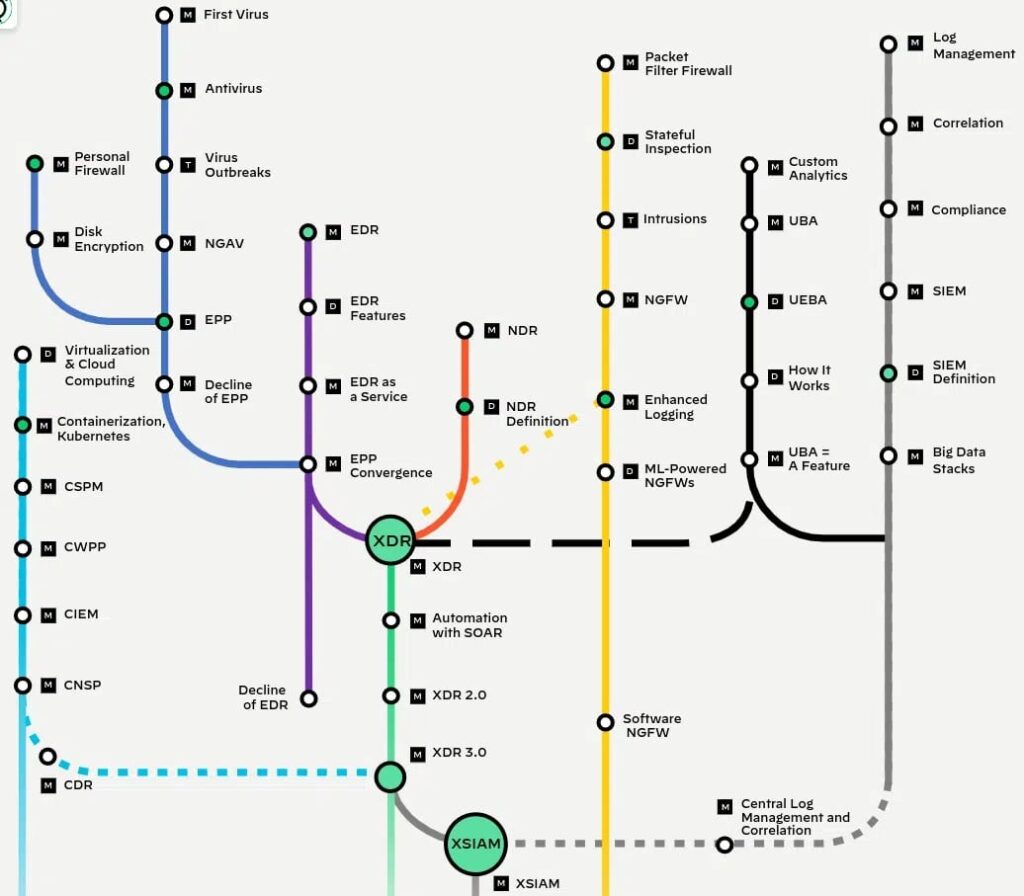

- Endpoint Management: EDR, EMM, EPP, MAM, MCM, MDM, MTD, UEM, UES.

- Application Security and DevSecOps: CI/CD, Compliance as Code, Container Security Solutions, IaC, Policy as Code, RASP, Sandboxing, SCA, Secrets Management Tools, Threat Modeling Tools.

- Vulnerability Management: CAASM, DAST, EASM, IAST, MAST, OSINT Tools, PT, SAST, VA, VM, Vulnerability Scanners.

- Cloud Security: CASB, CDR, CIEM, CNAPP, CSPM, CWPP.

- Security information and event management: DRPS, LM, MDR, NDR, NSM, SEM, SIEM, SIM, SOAR, TIP, UEBA, XDR.

- Risk Management: CCM, CMDB, CSAM, GRC, ISRM, Phishing Simulation, SAT.

In some cases, acronyms may not be universally recognizable without appropriate context or definitions, especially for non-specialist readers.

For some solutions, commonly accepted and uniquely identifiable acronyms are not well-established, so full names are used.

Cryptographic Solutions

Cryptography was one of the first disciplines within information security. Since antiquity, militaries, diplomats and spies have used various devices and tools to encrypt, decrypt and transmit sensitive information. During the Middle Ages, cryptography continued to evolve and became more complex and diverse, including various forms of transposition and substitution ciphers.

Since the early 20th century, cryptographic technologies have evolved with a relentless stream of mechanical, electronic, and mathematical innovations, reaching such a level of development that they are now ingrained in our daily lives everywhere, becoming an invisible but integral part of almost any work with digital information.

Modern cryptographic solutions play a key role in protecting data and the systems that process it. Cryptographic technologies are present in a great many classes of information security systems. Nevertheless, we decided to distinguish several classes of general and specialized cryptographic solutions.

- ES (Encryption Solutions) is a general class of solutions that protect data by converting it into an encrypted form that can only be accessed with the correct key. Encryption solutions cover a wide range of tools and technologies designed to encrypt data, whether at rest or in transit. This can include custom encryption applications, encryption protocols for secure communications (e.g. SSL/TLS for Internet traffic), and encryption services provided by cloud providers. The goal of these solutions is to ensure data confidentiality and integrity by converting readable data into an unreadable format that can only be reversed with the correct decryption keys.

- FDE (Full Disk Encryption) is a class of software or hardware solutions designed to encrypt all data on the hard disk of a computer or other device. This approach protects information at the whole disk level, including system files, programs and data. The main purpose of FDE is to ensure privacy and protect the data on the device from unauthorized access, especially in the event of loss or theft. The two varieties of FDE are software and hardware solutions. FDE systems are associated with TPM technology. One of the first FDE systems, Jetico BestCrypt, was developed in 1993. Common FDE solutions built into operating systems are Microsoft BitLocker and Apple FileVault.

- Password Management Tools (Password Managers) are designed to simplify and improve the processes of creating and storing passwords. The functions of password managers include generating strong passwords, storing and organizing passwords, automatically filling out forms, and changing passwords. Varieties of password management tools include personal password managers; team password managers that provide password sharing functionality for workgroups; privileged password managers; and enterprise password managers (EPMs). The first software password manager, Password Safe, was created by Bruce Schneier in 1997 as a free utility for Microsoft Windows 95. As of 2023, the most commonly used password manager was Google Chrome’s built-in password manager.

- EPM (Enterprise Password Management) is an evolution of password managers applied to the entire organization. These solutions are designed for centralized password management and provide management, monitoring and protection of both user and service accounts, including privileged ones. EPM features include centralized password management, automatic password updates, password activity tracking, regular auditing to ensure compliance with security policies, and role-based access management. The two varieties of EPM are on-premises and cloud-based. EPM solutions are related to PAM solutions. Password management solutions in the corporate environment started to evolve in the early 2000s.

- TRSM (Tamper-Resistant Security Module) is a generic name for devices designed to be particularly resistant to physical tampering and unauthorized access. These modules often include additional physical security measures, such as self-destruct mechanisms, to prevent physical attacks or unauthorized access to the encryption keys and cryptographic operations they perform. TRSMs are needed in environments where security is a primary concern, such as military or financial institutions. The simplest examples of TRSMs are payment smart cards, which emerged in the 1970s. POS terminals and HSM devices are also examples of TRSMs.

- HSM (Hardware Security Module) is a physical computing device that protects and manages secrets. HSMs emerged in the late 1970s. Hardware security modules typically provide secure management of the most important cryptographic keys and operations. HSMs are used to generate, store and manage encryption keys in a secure form, offering a higher level of security than software key management because the keys are stored in a tamper-proof hardware device. HSMs are widely used in high-security environments such as financial institutions, government agencies and large enterprises where protecting sensitive data is critical.

- KMS (Key Management Systems) are solutions designed to centrally manage the cryptographic keys used to encrypt data. Their main task is to ensure the security, availability and lifecycle management of keys. KMSs automate the creation, distribution, storage, rotation and destruction of keys. They integrate with various applications and infrastructure, providing centralized control over encryption in enterprises. The idea of centralized cryptographic key management began to develop in the 1970s along with the growing use of cryptography, but specific KMS systems began to be actively developed and deployed in the 1990s and 2000s.

- PKI (Public Key Infrastructure) is a system used to create, manage, distribute, use and store digital certificates and public cryptographic keys. It provides secure digital signing of documents, data encryption, and authentication of users or devices in electronic systems. PKI is a key element in securing network communications and transactions, allowing participants to exchange data confidentially and authenticate each other. PKI provides a set of tools for managing asymmetric keys and certificates, both within individual organizations and across entire nations. This distinguishes PKI solutions from KMS solutions, which focus on more flexible key management, but usually only within a single enterprise. The history of PKI began in the 1970s with the development of asymmetric encryption. The concept of PKI in the modern sense was developed and standardized in the 1990s. With the advent of the Ethereum blockchain in 2015, decentralized PKIs began to develop.

- SSE (Server-Side Encryption) is a method of encrypting data on the server side, on the server's own drives, used since the early 2000s to improve data security. The encryption keys are managed by the server itself or by a central key management system, so that only authorized parties can read the data. SSE is particularly effective for protecting sensitive data in cloud storage because it prevents unauthorized access even if physical storage devices are compromised.

- TPM (Trusted Platform Module) is a hardware component that securely stores the cryptographic keys used to encrypt and protect information on a computer or other device. The TPM is installed on the device’s motherboard or embedded in the processor. The TPM concept was first introduced by the Trusted Computing Group (TCG) consortium and standardized by ISO in 2009. Since then, TPM has become a standard component in many computers, especially in the corporate sector, where security requirements are particularly high.

- ZKP (Zero-Knowledge Proof) is a method by which one party can convince another that it knows a secret without revealing anything about the secret beyond the fact that it knows it. Silvio Micali, Shafi Goldwasser, Oded Goldreich, Avi Wigderson, and Charles Rackoff contributed to the development of the ZKP technique in the 1980s. Since 2020, ZKP methods have been used to build post-quantum security systems, i.e., systems that are resistant to cryptanalysis on quantum computers. ZKP technology is finding more and more applications, from transaction security and authentication to privacy in blockchain systems and other areas where personal data protection and privacy are important.
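
To make the zero-knowledge idea from the last item more concrete, here is a minimal sketch of one well-known construction, the Schnorr identification protocol. This choice is ours for illustration; the overview does not single out any particular ZKP scheme. The group parameters `P`, `Q`, `G` and all function names are assumptions, and the parameters are deliberately tiny, so the code is suitable for study only, not for protecting anything.

```python
import secrets

# Toy group parameters (assumption: far too small for real-world use).
P = 23  # prime modulus
Q = 11  # prime order of the subgroup generated by G
G = 2   # generator of that subgroup: pow(2, 11, 23) == 1


def keygen():
    """Return (secret x, public y) with y = G^x mod P."""
    x = secrets.randbelow(Q - 1) + 1  # secret in [1, Q-1]
    return x, pow(G, x, P)


def commit():
    """Prover step 1: pick a random nonce r and send t = G^r mod P."""
    r = secrets.randbelow(Q)
    return r, pow(G, r, P)


def respond(x, r, c):
    """Prover step 2: answer the verifier's challenge c."""
    return (r + c * x) % Q


def verify(y, t, c, s):
    """Verifier: accept if G^s == t * y^c (mod P)."""
    return pow(G, s, P) == (t * pow(y, c, P)) % P


def run_round(x, y):
    """One full round between an honest prover and the verifier."""
    r, t = commit()               # prover -> verifier: commitment t
    c = secrets.randbelow(Q)      # verifier -> prover: random challenge
    s = respond(x, r, c)          # prover -> verifier: response s
    return verify(y, t, c, s)     # the verifier never sees x itself
```

An honest prover passes every round, because G^s = G^(r + c·x) = t · y^c. The verifier only ever sees `t`, `c` and `s`, and the fresh random nonce `r` hides `x` in each response. With parameters this small a cheater could pass a round by luck (when the challenge happens to be zero), which is one reason real deployments use very large groups.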

Data Security

Not all information can be encrypted, and not all threats can be detected and eliminated. Even encrypted information can still be destroyed, blocked or corrupted, or it can leak due to user error or computer vulnerabilities.

Solutions in this group can also be attributed to some of the other groups described in this work, such as threat prevention. However, here we focus on specialized technologies in the field of structured and unstructured data security. Therefore, these solutions are singled out as a separate category. They have evolved continuously since the first databases were introduced in the 1960s.

The application of these technologies not only protects critical information, but also improves operational efficiency, increases customer confidence, and meets the growing demands of information security.

- DB Security (Database Security) includes security solutions to protect structured data stored in databases from unauthorized access, modification, leakage, or destruction. Some of these features, such as identification and access control, and backup and recovery, date back to the 1960s and have been actively developed since the 1980s. Other features, such as encrypting data in the database, auditing and monitoring the database, masking data in the database, and protecting against SQL injection and other types of attacks, have become popular since the 2000s.

- DAG (Data Access Governance) is a technology for managing threats of unauthorized access to sensitive and valuable unstructured data that began to develop in the 2000s. DAG mitigates both malicious and non-malicious threats by ensuring that sensitive files are stored securely, access rights are properly enforced, and only authorized users can access data. DAG also actively protects repositories that store sensitive and valuable data.

- Data Masking technologies replace sensitive data with fictitious but realistic-looking values. This process preserves the format and appearance of the original data, but renders it useless to anyone trying to misuse it. Data masking is widely used to protect personal and sensitive information during software development, testing, and data analysis. It helps businesses comply with privacy standards and regulations such as GDPR. Data masking came into prominence in the 2000s.

- Tokenization is a technology that replaces sensitive data with non-confidential equivalents, which are usually of a different type and format. This is how tokenization differs from data masking. For example, in the tokenization process, a sensitive data element such as a credit card number is completely replaced with a non-confidential equivalent known as a token, which has no external or exploitable meaning or value. The token is correlated with the sensitive data through a tokenization system, but does not reveal the original data. The solution started to be applied in the early 2000s and is widely used in payment processing.

- DRM (Digital Rights Management) is a technology designed to control the use of digital media and content. The first example of DRM was a system developed by Ryuichi Moriya in 1983. Active development of DRM began in the late 1990s. One of the first companies to actively implement DRM was Sony. DRM technology is used by copyright holders to control the terms of use, copying and distribution of content. This is accomplished through the use of various technical means, including encryption, watermarks, license keys, and restrictions on copying and printing functions. Although copyright compliance is considered a related (non-core) information security requirement, we have considered DRM in the context of the next class of solutions, IRM (Information Rights Management).

- IRM (Information Rights Management) or E-DRM (Enterprise Digital Rights Management, Enterprise DRM) is a technology designed to protect sensitive information in documents and electronic files. IRM solutions emerged in the late 1990s and early 2000s as an evolution of the DRM solutions described above. Unlike DRM, which is focused on individuals, IRM solutions are mainly used in organizations. One of the early pioneers in this area was Adobe, which incorporated IRM technologies into its PDF rights management products. These solutions allow you to restrict access to information and control its use even after it has left the original organization. IRM includes encrypting data and enforcing access policies that define who can view, edit, print or transmit information and how. IRM is a key element in many organizations’ data protection strategies.

- Data Classification solutions help discover, identify, categorize and label data according to its confidentiality, importance and other criteria. Data classification solutions started to be widely used in the 2000s. The main purpose of these solutions is to facilitate data management, ensure compliance with regulatory requirements, and enable integration with DLP (Data Loss Prevention), encryption, and other solutions.

- DLP (Data Loss Prevention, less commonly Data Leakage Prevention) solutions began to be offered in the mid-2000s by companies such as Symantec, McAfee and Digital Guardian. These solutions identify, monitor and prevent leaks of sensitive data. This includes protecting data-in-use, data-in-motion, and data-at-rest through content and contextual analysis, as well as data classification. DLP solutions control and regulate the flow of data across an organization’s network so that sensitive information does not leave predefined perimeters without permission. DLP solutions are important to protect intellectual property, comply with privacy regulations, and prevent accidental or malicious data breaches.

- DCAP (Data-Centric Audit and Protection) is a set of solutions for auditing and protecting data that focus on the data itself rather than on the infrastructure around it. These systems monitor, analyze and protect data at all stages of its lifecycle, from creation to destruction. They enable organizations to track and analyze data access and usage, detect unusual or suspicious behavior patterns, and prevent data breaches. DCAP includes tools for data classification, access monitoring, auditing, threat protection and compliance. DCAP solutions began to evolve as a separate class in the early 2010s in response to increasing data security threats and compliance requirements. DCAP solutions are the result of the evolution and synthesis of technologies in information systems security, data analytics and cybersecurity.
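
The difference between Data Masking and Tokenization described in the list above can be sketched in a few lines. This is an illustration under our own assumptions, not a description of any particular product: the function `mask_card_number`, the class `TokenVault` and the `tok_` prefix are hypothetical names, and a real token vault would be a hardened, access-controlled service rather than an in-memory dictionary.

```python
import secrets


def mask_card_number(pan: str) -> str:
    """Masking: keep the layout and the last four digits, replace the other
    digits with random ones. The result looks real but cannot be reversed."""
    remaining = sum(ch.isdigit() for ch in pan)  # digits still to process
    out = []
    for ch in pan:
        if ch.isdigit():
            keep = remaining <= 4  # True only for the last four digits
            out.append(ch if keep else str(secrets.randbelow(10)))
            remaining -= 1
        else:
            out.append(ch)  # separators stay in place, so the format survives
    return "".join(out)


class TokenVault:
    """Tokenization: swap a value for a random token and remember the mapping.
    Only a party with access to the vault can recover the original value."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        if value in self._value_to_token:  # reuse the token for a known value
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(8)  # random, carries no meaning
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        return self._token_to_value[token]
```

For example, `mask_card_number("4111 1111 1111 1111")` returns a string with the same grouping and the same last four digits, while `TokenVault().tokenize(...)` returns an opaque token in a different format that only the vault can map back through `detokenize`.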

Threat Detection

According to one generally accepted simple classification of information security methods and tools, all these methods and tools are divided into detective, preventive and compensatory. This division is rather conventional, but it corresponds to the life cycle of information security problems – from threats and exploitation of vulnerabilities to incidents and damage from them. Therefore, such a categorization is useful for training.

Obviously, the earlier an information security threat is detected, the more effective the defense against it. Therefore, information security technologies have naturally evolved towards a proactive approach to detecting security threats, increasing the depth of analysis and adapting to search for threats in specific environments or conditions.

Some of these solutions can be categorized into other groups, such as network security. However, in order to improve the consistency of the presentation and simplify the mastering of the material, while keeping a balance between the application of narrow and broad groups of solutions, we decided to describe these solutions in the group of detection technologies.

- CCTV (Closed-Circuit Television) is a video surveillance system. It was invented by Lev Termen in 1927 and is still actively used in various security applications. CCTV is used to monitor security in real time and to investigate past events. Modern CCTV uses technology to record, process, store, analyze and play back video information, often with audio. Face recognition, license plate recognition and other identification technologies are also used.

- FIM (File Integrity Monitoring) is a technology that focuses on monitoring and detecting changes to operating system and application software files. This includes checking the integrity of files, system configuration, the registry, and other critical elements. The primary purpose of FIM is to detect unauthorized changes that may be signs of hacking, malware, or an internal security breach. FIM works by comparing the current state of files and configurations against a known, trusted “baseline”. Any deviation from this baseline can be a sign of a security problem. The FIM concept has evolved since the 1980s. One of the first FIM systems, Tripwire, which is still in use today, was created in 1992 by Eugene Spafford and Gene Kim.

- IDS (Intrusion Detection System) is a system that tracks system events and network traffic for suspicious activity or attacks. An IDS compares the inspected data against known signatures or attack patterns, as well as against normal behavioral profiles, to detect outliers. When it finds a match or an outlier, the IDS generates alerts or reports on the detected incidents and provides contextual information to help understand and respond to them. The earliest preliminary concept of IDS was formulated in 1980 by James Anderson. In 1986, Dorothy Denning and Peter Neumann published the IDS model that formed the basis of many modern systems.

- HIDS (Host-based Intrusion Detection System, Host-based IDS) is a subclass of IDS that monitors and analyzes incoming and outgoing traffic from a host computer or other device, as well as files and system logs, to detect suspicious or malicious activity. HIDS are designed to detect intrusions and suspicious activity on a particular computer. They can detect malware, rootkits, phishing, and some other forms of attacks. HIDS typically use signatures of known threats, anomalous behavior, or both to detect suspicious activity. HIDS solutions have evolved since the 1980s in close association with FIM systems.

- NIDS (Network Intrusion Detection System, Network IDS) is a subclass of IDS that monitors and analyzes network traffic to detect malicious activity, unauthorized access, or violations of security policies. These systems are typically embedded in active network devices (switches, routers, etc.) and monitor and analyze both external and internal network traffic for abnormal or suspicious behavior such as DoS attacks, port scans, intrusion attempts, etc. Besides analyzing network traffic and generating alerts or reports on detected incidents, NIDS also integrates with other security systems, such as firewalls, intrusion prevention systems (IPS), SIEM systems, etc. NIDS have been evolving since the late 1980s.

- IIDS (Industrial Intrusion Detection System, Industrial IDS) is an intrusion detection system specifically designed for industrial networks such as SCADA, ICS or IIoT. IIDS have been developed since the late 1990s. IIDS monitor abnormal or malicious behavior in industrial networks, which can be due to cyberattacks, equipment errors, or security policy violations. IIDS also collect information on the status of devices and process parameters on industrial networks and provide information on the nature, source and consequences of detected incidents, as well as recommendations for remediation.

- DPI (Deep Packet Inspection) is a technology for inspecting network packets based on their content in order to regulate and filter traffic, as well as to accumulate statistical data. The concept of DPI began to develop in the late 1990s. The technology allows service providers, organizations and government agencies to apply various policies and measures to ensure the security, quality and efficiency of network communications. This approach analyzes not only the headers but also the payload of network packets, at OSI model layers two (link) to seven (application). Protocols, applications, users, and other entities involved in network communication are identified and classified. Further development of the technology has allowed it to be used not only for threat detection, collection and analysis of network communication statistics for monitoring, reporting and optimization, but also for blocking malicious code, attacks, data leaks and other security breaches, as well as for implementing QoS (Quality of Service) and other traffic management mechanisms such as rate limiting, prioritization, caching and others.

- NTA (Network Traffic Analyzer, also known as network analyzer, packet analyzer, packet sniffer, or protocol analyzer) is a class of software or hardware tools designed to monitor and analyze real-time network traffic in wired and wireless networks. NTAs provide detailed information about traffic characteristics, including the sources, destinations, volume, and types of data being transmitted. Unlike NIDS, NTAs provide a broader view of network activity and are used not only for security, but also to solve a variety of network and application problems. Traffic analyzers integrate with IDS/IPS, SIEM, SOAR, and network configuration and device management tools. The history of traffic analysis begins with the interception and decryption of enemy information during World Wars I and II. Tcpdump, one of the first computer traffic analyzers, which works in the command line and is still used today, appeared in 1988. The Ethereal analyzer, which appeared in 1998, developed the NTA technology further. In 2006, Ethereal was renamed Wireshark. This tool is also still popular today. In 2020, NTA solutions evolved into NDR (Network Detection and Response).

- NBA (Network Behavior Analysis) is a class of solutions designed to monitor and analyze network behavior to detect anomalies and, as an additional effect of this analysis, to detect security threats. NBA technologies include analyzing event logs and network traffic, and are used in monitoring network performance and security. NBA solutions are sometimes integrated with IDS, IPS, SIEM, EDR and other systems. NBA technologies have evolved since the early 2000s. Over time, NBA solutions have evolved to include machine learning and artificial intelligence.

- NBAD (Network Behavior Anomaly Detection) – this technology, according to one group of sources, is a component of NBA solutions that specializes exclusively in security and is responsible for the early detection and prevention of incidents such as viruses, worms, DDoS attacks or abuse of authority. According to another group of sources, NBA and NBAD are one and the same. We have reason to believe that some NBA vendors have focused on security functionality in their systems, while some NBAD vendors, on the contrary, have gone beyond security functionality, blurring the line between these two classes of solutions.
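
The baseline comparison described in the FIM entry above can be illustrated with a short sketch. This is a minimal example under our own assumptions, not a description of how Tripwire or any other product works: the function names `snapshot` and `compare` are hypothetical, SHA-256 is chosen only for illustration, and a real FIM tool would also track permissions, ownership, registry keys and other attributes.

```python
import hashlib
from pathlib import Path


def snapshot(root: Path) -> dict[str, str]:
    """Map every file under root (by relative path) to its SHA-256 digest."""
    digests = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            name = path.relative_to(root).as_posix()
            digests[name] = hashlib.sha256(path.read_bytes()).hexdigest()
    return digests


def compare(baseline: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Report how the current state deviates from the trusted baseline."""
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "modified": sorted(
            name
            for name in baseline.keys() & current.keys()
            if baseline[name] != current[name]
        ),
    }
```

In such a scheme the baseline would be taken once, while the system is known to be in a trusted state, and stored where an attacker cannot modify it. Any non-empty list in the report is a deviation worth investigating.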

Threat Prevention

After reviewing the various tools and techniques for detecting information security threats, let’s move on to the next stage in the lifecycle of security problems and solutions – threat prevention. If threat detection is the first step to security, then threat prevention is the most active phase of threat management.

In this section, we will focus on hardware and software solutions that not only detect threats, but also actively prevent them from materializing, providing a higher level of protection for systems and data. We will look at how modern technologies and innovative approaches can act as a barrier against a variety of threats, ranging from computer viruses and hacker attacks, to protecting websites and individual critical server components.

As with the threat detection solutions described above, some of the classes of solutions described below may be included in other groups of solutions, such as network security or endpoint protection. However, given the functional nature of our categorization, we decided to describe them here to improve understanding of the material.

- AV (Antivirus) is a specialized program for detecting and removing malicious software such as viruses, Trojans, worms, spyware, adware, rootkits and others. Antivirus prototypes appeared in the 1970s, and the first popular antiviruses appeared in the 1980s. An antivirus protects your computer from malicious code infections and repairs corrupted or modified files. Antivirus can also prevent malicious code from infecting files or the operating system by using proactive protection, which analyzes program behavior and blocks suspicious activities. Most of today’s commercial anti-malware products have expanded their capabilities and moved into the EPP (Endpoint Protection Platforms) solution category.

- NGAV (Next-Generation Antivirus) or NGAM (Next-Generation Anti-Malware) is a modern approach to anti-malware development that goes beyond traditional antivirus by offering a broader and deeper level of protection. NGAV uses advanced techniques such as machine learning, behavioral analysis, artificial intelligence and cloud technologies to detect and prevent not only known viruses and malware, but also new, previously unknown threats. These systems are capable of analyzing large amounts of data in real time, providing protection against sophisticated and targeted attacks such as zero-day exploits, ransomware and advanced persistent threats (APTs). The term “Next-Generation Antivirus” began to be used in the mid-2010s. CrowdStrike and Cylance were among the first companies to start promoting the idea of NGAV.

- IPS (Intrusion Prevention System) is a system designed to detect and prevent unauthorized access attempts and other attacks on computer networks and individual computers. The first IPS systems appeared in the early 1990s as a logical evolution of intrusion detection systems (IDS). Unlike IDSs, IPSs not only detect suspicious activity but also take steps to neutralize it, making them more effective in preventing attacks than IDSs. On the other hand, typically, any IPS system can operate in IDS mode. Therefore, the compound abbreviation IDS/IPS is used quite often, less often IDPS or IDP System. Cisco Systems and Juniper Networks were among the first to offer IPS solutions commercially.

- HIPS (Host-based Intrusion Prevention System) is a subclass of IPS systems and an evolution of HIDS systems to protect against threats at the level of the individual host – a computer or other device. HIPS analyzes and monitors incoming and outgoing traffic, application activity, and system changes on the host, detecting and blocking suspicious activity that may indicate intrusion or malicious behavior. HIPS systems can use a variety of techniques, including signature analysis, heuristic analysis, and monitoring changes to the system registry, file systems, and important system files. HIPS systems have been actively evolving since the 2000s and have been integrated into more sophisticated classes of information security solutions in recent decades.

- NIPS (Network Intrusion Prevention System) is a subclass of IPS systems and an evolution of NIDS systems for preventing unauthorized access or other breaches in computer networks. NIPS can be implemented as either physical devices or software, and are often integrated with other security systems, such as firewalls. NIPS continuously analyzes network traffic in real time and can automatically block suspicious or malicious traffic based on predefined security rules and threat signatures. NIPS works by deeply analyzing packets and sessions, applying techniques such as signature analysis, anomaly analysis and behavioral analysis to identify and block attacks such as DoS (Denial of Service), DDoS, exploits, viruses and worms. One of the first NIDS/NIPS, created in the 1990s and still in use today, is the Snort application. The evolution of NIDS into NIPS intensified in the 2000s with Cisco, Juniper Networks, and McAfee. In recent decades, NIPS has been integrated into more sophisticated classes of information security solutions.

- NGIPS (Next-Generation Intrusion Prevention System) is a subclass of IPS systems that provides deeper and more intelligent analysis of network traffic, including encrypted traffic, as well as per-user enforcement of application policies. NGIPS integrates with advanced technologies such as machine learning, behavioral analytics, and deep data analytics to detect and prevent not only known threats, but also previously unknown or specific attacks such as zero-day exploits and advanced persistent threats (APTs). These systems can adapt and respond to the changing threat landscape, providing protection in dynamic and complex network environments. NGIPS are often integrated with SIEM systems. The NGIPS concept began to evolve in the late 2000s and early 2010s. FireEye, Palo Alto Networks and Cisco contributed to the development and popularization of NGIPS.

- ATP (Advanced Threat Protection) is a comprehensive class of cybersecurity solutions designed to protect organizations from sophisticated threats such as targeted attacks, zero-day exploits, and advanced persistent threats (APT, not to be confused with ATP). ATP provides protection at multiple layers, including network, endpoints, applications and cloud services, using various techniques such as machine learning, behavioral analysis, sandboxing and advanced traffic analysis. ATPs integrate with various security infrastructure components to provide centralized management and coordination of security measures. ATP solutions were proposed in the 2000s and began to be actively promoted in the mid-2010s by companies such as Symantec.
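
The difference between detection and prevention noted in the IPS entry above, where the same system can run in IDS mode or IPS mode, can be shown with a toy sketch. Everything here is an assumption made for illustration: the `Inspector` class, the `prevent` flag and the two byte-pattern signatures are hypothetical, and real products use far richer rule languages, protocol decoding and anomaly models.

```python
from dataclasses import dataclass, field

# Hypothetical signatures: name -> byte pattern to look for in a payload.
SIGNATURES = {
    "sql-injection": b"' OR 1=1",
    "path-traversal": b"../../",
}


@dataclass
class Inspector:
    # False = IDS mode (alert only); True = IPS mode (alert and block).
    prevent: bool = False
    alerts: list[str] = field(default_factory=list)

    def inspect(self, payload: bytes) -> bool:
        """Return True if the payload may pass, False if it is blocked."""
        matched = [name for name, pattern in SIGNATURES.items() if pattern in payload]
        self.alerts.extend(matched)  # both modes record what was detected
        if matched and self.prevent:
            return False  # only IPS mode actively stops the traffic
        return True
```

In this sketch both modes produce the same alerts; the only difference is whether the matching payload is allowed through.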

Corrective and Compensatory Solutions

Continuing the sequence of the previous two sections, which described detective and preventive solutions, it is logical to describe the third type of security measures in this series: corrective and compensatory solutions. They differ from detective and preventive solutions in how they work: they aim to correct security violations that have already occurred or to reduce the damage they cause, including through the prosecution of security violators.

Technically, to complete the picture, cyber-attack tools could also be included in this group, but such activities are on the border of legality, so we will not describe these tools.

In their purest form, corrective measures are measures for recovering failed data, software, hardware, or services from backups, and compensatory measures are insurance services that compensate for damage after security incidents when recovery by other means is impossible. However, under certain assumptions, some other methods and tools can also be included in this group.

- Backup and Restore systems are a critical class of hardware and software solutions used to ensure data integrity and availability by backing up important information so that it can be restored in the event of loss or corruption. The key functions of these systems are regular automatic backups, data encryption, data recovery and version control. There are local, cloud and hybrid backup systems. Backup methods and tools appeared in the pre-computer era, organically developed with the development of computing technology and are now integrated with EPP, DB Security and many other solutions and technologies.

- HA (High-Availability) solutions are the evolution and automation of redundancy technologies for real-time operations. HA systems minimize downtime and ensure that business-critical applications remain at least partially available to users even in the event of failures or disasters. The main functions of HA solutions are data replication, automatic switching, load balancing, monitoring and management. The main technical approaches to HA implementation are clustering, failover backup and distributed systems. HA technologies have been developed since the beginning of computing. Since the 1980s, HA solutions have become more affordable and diverse.

- FT (Fault-Tolerance) solutions are an evolution of HA solutions. With few exceptions, FT solutions are superior to HA solutions in terms of functionality. FT solutions have higher levels of load balancing, redundancy and data integrity. This results in higher complexity, quality (data and service availability) and cost. On the other hand, the scalability of FT solutions is usually lower than HA due to the embedded architecture. The first known fault-tolerant computer was created in 1951 by Antonin Svoboda. Later NASA, Tandem Computers and other organizations contributed to the development of FT technologies.

- DRP (Disaster Recovery Planning, Disaster Recovery Solutions) is a set of measures and tools aimed at minimizing the consequences of disasters: natural disasters, major technological accidents, cyberattacks and other similar incidents that can lead to disruptions not only in the IT infrastructure, but also in the entire organization. Unlike Backup and Restore solutions, the DRP process considers backing up not only data, but also any other critical assets: software, hardware, communications, key vendors, etc. Unlike HA and FT systems that operate automatically in real time, DRP solutions often focus on manual recovery from relatively rare events, although modern DRP systems utilize automated failover to redundant systems and infrastructure. The concept of disaster recovery has been evolving since the mid-1970s. As it evolved, DRP processes and solutions became incorporated into the corporate process of Business Continuity Planning (BCP), which had been evolving since the 1950s. Since the late 1990s, the term BCDR (Business Continuity and Disaster Recovery) has been used to combine DRP and BCP.

- Patch Management Systems – systems for managing “patches” and other software updates. The purpose of patch management is to keep information systems updated and protected from known vulnerabilities and bugs. The main functions of patch management are inventory of software and hardware in an organization, vulnerability monitoring, patch testing, patch deployment, and reporting and auditing. Patch management systems integrate with IDS/IPS, anti-malware, configuration management systems, and vulnerability assessment tools. The term “patch” is a literal one. Since the invention of punched cards in the 18th century, erroneous holes have been made in them for various reasons. Since about the 1930s, with the advent of IBM calculating machines and punched cards, errors in them were actively corrected by paper “patches” (physical stickers) with correct holes, which were glued directly onto the punched cards.

- IRP (Incident Response Platform) is a set of solutions designed to automate responses to information security incidents. The main goals of IRP are to effectively respond to incidents, minimize their impact and prevent recurrence. IRP functions include supporting automated threat response scenarios, tracking and analyzing incidents, recording incident lifecycle stages, providing tools for incident analysis and research, communication and response coordination, reporting and documentation, analyst training, etc. IRP systems integrate with IDS/IPS, TIP, SIEM, EDR, SOAR and other systems. IRP solutions began development in the early 2000s and evolved into SOAR by the early 2020s.

- Forensic Tools are a specialized class of software and hardware tools designed to collect, analyze, and present data collected from digital devices in the context of investigating cybercrime or information security incidents. The main purpose of these tools is to provide evidence, including evidence admissible in the courts of certain jurisdictions, when investigating crimes related to computers and networks, and to assist in the analysis of information security incidents such as unauthorized access, fraud or data breaches. Investigative tools can be categorized by the type of objects investigated: computers, mobile devices, networks, and the cloud. The use of non-specialized investigative tools evolved in the 1980s. In the 1990s, several specialized free and proprietary tools (both hardware and software) were created to allow investigations to be conducted with the guarantee of media immutability, which is one of the main requirements for securing evidence. Law enforcement agencies such as the FBI and Interpol have played an important role in the development of digital forensics tools. DFIR (Digital Forensics and Incident Response) solutions are a combination of IRP and investigative tools.
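
Two of the backup functions listed in the Backup and Restore entry above, version control and data recovery, can be sketched in a few lines. This is a minimal illustration under our own assumptions: the file naming scheme, the `.sha256` sidecar file and the function names are hypothetical, and the integrity check before restoring is our addition. Scheduling and encryption, which real systems provide, are left out.

```python
import hashlib
import shutil
from pathlib import Path


def sha256(path: Path) -> str:
    return hashlib.sha256(path.read_bytes()).hexdigest()


def backup(source: Path, backup_dir: Path) -> Path:
    """Copy source into backup_dir as the next numbered version and record its checksum."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    version = len(list(backup_dir.glob(f"{source.name}.v*.bak"))) + 1
    copy = backup_dir / f"{source.name}.v{version}.bak"
    shutil.copy2(source, copy)
    # Hypothetical convention: the checksum lives next to the copy.
    copy.with_name(copy.name + ".sha256").write_text(sha256(copy))
    return copy


def restore(copy: Path, target: Path) -> None:
    """Restore a backup copy, refusing to proceed if it no longer matches its checksum."""
    expected = copy.with_name(copy.name + ".sha256").read_text()
    if sha256(copy) != expected:
        raise ValueError(f"backup {copy} failed its integrity check")
    shutil.copy2(copy, target)
```

Keeping numbered versions rather than overwriting a single copy is what makes it possible to go back to a state from before a corruption or ransomware incident.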

Deceptive Solutions

This group describes special solutions that covertly make it more difficult to realize security breaches. This is accomplished either indirectly, by hiding actual objects or procedures from attackers, or directly, by misleading attackers. This distinguishes these solutions from any other solution that protects assets overtly and relies on, for example, access regimes, password secrecy, or the mathematical strength of encryption.

Solutions based on hiding technologies rely on the full or partial masking of information or of the means used to protect it. The approach of hiding information security tools and techniques is called “Security through Obscurity”. This approach is often criticized as being insufficiently robust. Although some elements of this approach are still in use, it is seen more as a complement to more robust and transparent security mechanisms. At the same time, solutions for hiding the information itself are developing quite actively.

Solutions based on deception technologies rely on the principle of creating a misleading environment or conditions for attackers to make it more difficult to realize unauthorized access or other information security breaches. The effectiveness of these solutions lies in manipulating attackers and forcing them to spend time and resources on work that does not accomplish their goals. An additional goal of some solutions in this group is to be able to study the actions of attackers in conditions close to the real ones, without putting at risk the real resources of the organization.

- Obfuscation is an information hiding technique involving various methods of masking or distorting data, code, or communications, making them difficult to read and difficult to analyze without special knowledge or keys. This technique is often used in software development to partially protect source code from reverse engineering and is useful for protecting intellectual property. Obfuscation is also used to hide sensitive data during transmission. Obfuscation techniques include changing data formats, using special algorithms to change code structure, and many other methods. Not only do they increase the complexity of human understanding of data, but they can also make automated data analysis more difficult, for example, when malware attempts to recognize protected data. Obfuscation originated in the 1970s, evolved among virus writers from the 1980s, and, as a method of data and code protection, began to develop in the 1990s.

- Steganography is a method of hiding the fact that information is being transmitted or stored. In digital steganography, data is often embedded in digital media files using various algorithms that can change, for example, the least significant bits of pixel color values in an image or of amplitude samples in audio files. Digital steganography began to be used in the 1980s and has since evolved into computer and network steganography systems. Computer steganography, unlike general digital steganography, is based on the specifics of the computer platform. An example is the StegFS steganographic file system for Linux. Network steganography is a method of transmitting hidden information through computer networks using the peculiarities of data transfer protocols.

- Entrapment and Decoy Objects are false targets such as fake files, databases, control systems, or network resources that appear valuable or vulnerable to attack. Traps and decoys are used to detect unauthorized activity as well as to analyze attacker behavior. The concept of traps was first proposed by the FIPS 39 standard in 1976. These technologies have been actively developed since the 1990s in the form of honeypot and honeynet solutions. One of the first documented examples of a honeypot was created in 1991 by William Cheswick. Honeynets are networks composed of multiple honeypots. Unlike single honeypots, which simulate individual systems or services, honeynets create a more complex and realistic network environment in which multiple traps can interact. This allows for the exploration of more complex and targeted attacks, as well as attacker behavior in a broader context. The Honeynet project, launched by Lance Spitzner in the late 1990s, is one of the first and best known examples of the use of honeynets.

- Deception Technology is a further development of trap and decoy technologies and represents a broad class of solutions involving various techniques and strategies for creating false indicators and resources on the network. These solutions include not only honeypots and honeynets, but also other means such as false network paths, fake accounts, and data manipulation to mislead attackers and divert them from real assets. Since the 2010s, Israeli companies such as Illusive Networks and TrapX have contributed to the development of deception-based enterprise products.

- Tarpits are solutions that slow or thwart automated attacks such as port scanning or the spread of worms and botnets. Tarpit solutions work by establishing an interaction with an attacker and deliberately delaying that interaction, causing the malware to take significantly longer than usual. This is accomplished by intentionally slowing down responses to network requests or creating false services and resources that seem interesting to the attacker. One famous example of a “tar pit” is LaBrea Tarpit, created by Tom Liston in the early 2000s. This program was designed to combat the Code Red worm, which was spreading rapidly by scanning and infecting web servers. LaBrea effectively slowed down the scanning by creating virtual machines that appeared vulnerable to the worm but were actually traps.
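
The steganography entry above mentions changing the lowest bits of pixel values. A minimal sketch of that idea follows, under stated assumptions: the cover is treated as a plain sequence of bytes (for example, raw pixel channel values), the function names `embed` and `extract` are hypothetical, and the receiver is assumed to know the message length in advance. Real tools work with actual image or audio formats and usually encrypt the payload first.

```python
def embed(cover: bytes, message: bytes) -> bytes:
    """Hide message bits in the least significant bit of successive cover bytes."""
    # Unpack the message into individual bits, most significant bit first.
    bits = [(byte >> shift) & 1 for byte in message for shift in range(7, -1, -1)]
    if len(bits) > len(cover):
        raise ValueError("cover is too small for the message")
    stego = bytearray(cover)
    for i, bit in enumerate(bits):
        stego[i] = (stego[i] & 0xFE) | bit  # overwrite only the lowest bit
    return bytes(stego)


def extract(stego: bytes, length: int) -> bytes:
    """Recover `length` bytes by reading the lowest bits back in the same order."""
    out = bytearray()
    for i in range(length):
        value = 0
        for carrier in stego[i * 8:(i + 1) * 8]:
            value = (value << 1) | (carrier & 1)
        out.append(value)
    return bytes(out)
```

Because each carrier byte changes by at most one, the modified image or sound is normally indistinguishable from the original to a human observer, which is what hides the fact of transmission.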

Identity and Access Management

This section describes solutions that play a specific role in ensuring that the right people have access to the right resources at the right time and with the right privileges.

IAM (Identity and Access Management) is the generic name for processes and solutions aimed at managing user identities and controlling access to resources. These systems authenticate users’ identities, manage their access rights, track their actions, and ensure data security and confidentiality. IAM includes authentication, authorization, user management, role and access policy management, and integration with various applications and systems.

The history of IAM begins long before the computer age. Seals and passphrases used by ancient civilizations were the first prototypes of IAM. The first models (MAC – Mandatory Access Control, DAC – Discretionary Access Control, etc.) and the first implementations of access control systems began to take shape in the late 1960s.

More modern access models (RBAC – Role-Based Access Control, ABAC – Attribute-Based Access Control, etc.) as well as IAM as integrated systems have been actively developed since the 1990s. Beginning in the 2000s, IAM solutions began to utilize the RBA (Risk-Based Authentication) method.
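
As a rough illustration of the role-based model mentioned above, the following sketch grants permissions to roles and roles to users, so that access decisions never refer to individual users directly. The role names, permission strings and users are hypothetical and chosen only for the example.

```python
# Hypothetical roles, permissions and users, for illustration only.
ROLE_PERMISSIONS = {
    "auditor": {"logs:read"},
    "operator": {"logs:read", "service:restart"},
    "admin": {"logs:read", "service:restart", "users:manage"},
}

USER_ROLES = {
    "alice": {"admin"},
    "bob": {"auditor"},
}


def is_allowed(user: str, permission: str) -> bool:
    """A user holds a permission only through one of their assigned roles."""
    return any(
        permission in ROLE_PERMISSIONS.get(role, set())
        for role in USER_ROLES.get(user, set())
    )
```

An attribute-based model would replace the static role lookup with a rule evaluated over attributes of the user, the resource and the context of the request.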

With the constant evolution of security threats and tightening security compliance requirements, IAM solutions are becoming not just a security measure, but a strategic tool that helps organizations not only protect their assets, but also improve efficiency and productivity.

- SSO (Single Sign-On) is an authentication method that allows a user to access multiple applications or systems by entering their credentials only once. It is a convenient access control method that greatly simplifies the process of logging into various corporate or private services. SSO eliminates the need to remember multiple passwords, which reduces the risk of losing or compromising them. There are different types of SSO, including enterprise SSO (for an organization’s internal systems), web SSO (for online services), and federated SSO (which uses standards such as SAML and OAuth to authenticate across different domains). The concept of SSO began to evolve in the 1980s as organizations looked for ways to simplify account management in the face of a growing number of computer systems and applications. The first SSO solutions were provided by Hewlett-Packard, CA Technologies, Oblix, Magnaquest Technologies and Novell. The development of cloud technologies in the 2000s has greatly expanded the needs and opportunities for SSO applications.

- Multi-Factor Authentication (MFA) is a method and technology that requires a user to provide two or more proofs of identity before accessing a resource. A special case of MFA for two factors is Two-Factor Authentication (2FA). MFA significantly increases security by combining multiple independent confirmations, usually of different kinds: something the user knows (such as a password or code), something the user has (such as a smartphone or token), and something that is part of the user (such as a fingerprint or facial biometrics scan). The preconditions for 2FA emerged in the 1980s. The system most often cited as the first 2FA implementation is a transaction authorization system based on the exchange of codes via two-way pagers, created in 1996 by AT&T. The first MFA solutions often involved the use of physical tokens or special cards. With the development of smartphones and biometric technologies since the early 2010s, MFA methods have become more diverse and affordable.

- IDM (Identity Management, IdM) are solutions that manage digital user identities. This includes the processes of creating and deleting user accounts and identities, as well as other management operations. IDM is sometimes used synonymously with IAM, but it is more accurate to think of IDM as one of the functions of IAM. While IDM focuses on identity management, IAM provides a more comprehensive approach, including mechanisms to protect data and resources from unauthorized access.

- WAM (Web Access Management) is a class of solutions designed to manage access to web applications and online services. The main purpose of WAM is to provide secure, controlled, and convenient access to web resources for authorized users. The functions of WAM are authentication and authorization, SSO, auditing and monitoring, and session management. There are various WAM solutions designed for different types of applications and environments, including cloud services, enterprise portals, and public websites. The development of WAM began in the late 1990s in the form of SSO solutions. In the 2000s, WAM started to become a separate class of solutions and, by now, has begun to lose relevance, giving way to modern IAM.

- PIM (Privileged Identity Management, Privileged Account Management or Privileged User Management, PUM) is a technology for managing accounts that have access to sensitive data or critical systems. These include, for example, accounts for super users, system administrators, database administrators, service administrators, etc. PIM focuses on the creation, maintenance and revocation of accounts with elevated permissions. PIM tools typically provide support for privileged account discovery, account lifecycle management, strong password policy enforcement, access key protection, and access monitoring and reporting. The concept of PIM emerged in the early 2000s.

- PAM (Privileged Access Management or Privileged Account and Session Management, PASM) is a class of solutions that manage access to critical systems and resources in an organization. PAM can be considered an extension of PIM because PAM provides a broader range of features for privileged access management. Examples include timely privilege assignment, secure remote access without a password, session recording capabilities, monitoring and controlling privileged access, and detecting and responding to suspicious activity. The concept of PAM began to evolve in the early 2000s. The functions and variations of PAM include PSM (Privileged Session Management), EPM (Endpoint Privilege Management), WAM (Web Access Management), PEDM (Privilege Elevation and Delegation Management), SAG (Service Account Governance), SAPM (Shared Account Password Management), AAPM (Application-to-Application Password Management or Application Password Management, APM) and VPAM (Vendor Privileged Access Management).

- IGA (Identity Governance and Administration) is a class of solutions for managing user identity, access to resources, and compliance with relevant policies and regulations. IGA solutions began to grow rapidly after the passage of the U.S. HIPAA act in 1996 and the SOX act in 2002. IGA solutions manage the identity lifecycle, implement access policy and role management, and provide auditing and reporting. IGA solutions can be viewed as a complement or extension of IAM solutions. The integration of IGA with PAM is known as PAG (Privileged Access Governance) technology.

- PIV (Personal Identity Verification) solutions provide strong identity verification for U.S. government and contractor employees. PIV solutions are designed to strengthen the security of access to the physical and information resources of government agencies. This is achieved through the use of PIV cards that contain biometric data (fingerprints, photos) as well as electronic certificates and PIN codes for access to information systems and physical facilities. The FIPS 201 standard, developed by NIST and describing PIV, was approved in 2005.

- IDaaS (Identity as a Service) is a cloud-based service that provides end-to-end IAM solutions. IDaaS is designed to provide secure, flexible and scalable management of digital user identities, including authentication, authorization and resource access accounting. IDaaS includes SSO, MFA, role-based access control (RBAC) and policy management, account synchronization, integration with various cloud and on-premises applications, etc. IDaaS is tightly integrated with other security systems, such as DLP, CASB and SIEM systems, providing a comprehensive approach to cybersecurity. The IDaaS concept began to evolve in the early 2000s with the proliferation of cloud technologies. Among the first companies to offer IDaaS solutions were Microsoft, Okta and OneLogin.
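
The combination of factors described in the MFA entry above can be sketched as follows. As an assumption for illustration, the “something the user has” factor is modeled as a time-based one-time code computed from a shared secret in the manner of RFC 4226 and RFC 6238; the source text does not prescribe this method. The `verify_login` function and its parameters are hypothetical, and the password check is reduced to a boolean supplied by the caller.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) for a given counter value."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, unix_time: int, step: int = 30) -> str:
    """Time-based variant (RFC 6238): the counter is the number of elapsed time steps."""
    return hotp(secret, unix_time // step)


def verify_login(password_ok: bool, submitted_code: str, secret: bytes, unix_time: int) -> bool:
    """Grant access only if both independent factors check out."""
    code_ok = hmac.compare_digest(submitted_code, totp(secret, unix_time))
    return password_ok and code_ok
```

A production implementation would also tolerate small clock drift, limit the number of attempts, and prevent reuse of a code within its validity window.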

Network Access Security

Secure access to network resources has been central to cybersecurity since the massive proliferation of the Internet at the beginning of the 21st century. Each of the secure network access solutions described below offers specific techniques and strategies for securing a highly dynamic and diverse network environment.

Consider how these technologies help organizations adapt to new cybersecurity challenges by providing reliable access control, protection from external and internal threats, flexibility and scalability in an ever-changing landscape of IT infrastructure and business processes. This section will provide you with an understanding of modern approaches to secure network access, their features, evolution, benefits, and potential applications as part of a comprehensive cybersecurity strategy.

- VPN (Virtual Private Network) is a generic term for technologies that enable one or more secure network connections over another network. A VPN can provide three types of connections: host-to-host, host-to-network, and network-to-network. VPNs are used to protect network traffic from interception and tampering. Protection is provided through the use of cryptography. VPNs are often integrated with firewalls, IDS/IPS systems, and IAM solutions. The first prototypes of VPNs appeared in the 1970s. Between 1992 and 1999, the first popular VPN protocols, IPsec and PPTP, were developed. Other examples of VPN protocols include L2TP and WireGuard. Some VPN implementations use SSL/TLS, MPLS technology, or other protocols and technologies. Cisco and Microsoft have made key contributions to the development of VPNs. In 2001, OpenVPN appeared and quickly became popular. WireGuard appeared in 2016 and was included in the Linux kernel in 2020, and it is currently actively supplanting OpenVPN as a more performant and simpler solution.

- DA (Device Authentication) is a set of technologies and methods used to authenticate devices in digital networks and systems. The main functions are device identification and authentication, encryption and access control. Types of solutions differ in the basis of authentication: MAC addresses, certificates, unique device identifiers (e.g., IMEI), etc. Device Authentication began to develop in the 1980s. The development of Device Authentication technologies led to the concept of NAC, described below. With the development of the Internet of Things (IoT), mobile technologies, and BYOD (bring your own device) policies, device authentication has become even more important for securing the networks to which these devices connect.

- NAC (Network Access Control) is a standard and broad class of network access control solutions that includes identifying and authenticating users, verifying the status of their devices, and enforcing security policies to control access to network resources. NAC solutions help secure the network by controlling access based on certain client computer criteria, such as user role, device type, location, and device state (anti-malware status, system updates, etc.). The concept of NAC began to evolve in the early 2000s. The basic form of NAC is Port-based Network Access Control (PNAC), defined by the IEEE 802.1X standard adopted in 2001. An evolution of NAC is the Trusted Network Connect (TNC) approach, based on device state and introduced in 2005. Examples of specific NAC implementations include Cisco Network Admission Control (2004-2011), Microsoft Network Access Protection (2008-2016), Cisco Identity Services Engine (ISE), Microsoft Endpoint Manager, Fortinet FortiNAC, Aruba ClearPass, and Forescout NAC solutions.

- ZTA (Zero Trust Architecture) is a security architecture based on the principle of zero trust (“trust no one, verify everything”). ZTA integrates with a wide range of network and security technologies, including identification, authentication, data protection, encryption, network segmentation, monitoring, and access control. The term “zero trust” was coined by Stephen Paul Marsh in 1994. The OSSTMM standard released in 2001 placed a lot of emphasis on trust, and the 2007 version of this standard asserted “trust is vulnerability”. In 2009, Google implemented a zero-trust architecture called BeyondCorp. Work begun in 2018 at NIST and NCCoE led to the publication of the SP 800-207 Zero Trust Architecture standard in 2020. The widespread adoption of mobile and cloud services has since accelerated the spread of ZTA solutions.

- SDP (Software-Defined Perimeter) is a network security model based on the creation of dynamically configurable perimeters around network resources. The SDP model can be seen as one way to implement the ZTA architecture. Unlike NAC, the more modern SDP model does not control a user’s or device’s access session to the entire network, but rather each access request to each resource individually. SDP uses cloud technology and software-defined architectures to create tightly controlled access points that can adapt to changing security requirements. SDP is also referred to as “black cloud” because the application infrastructure, including IP addresses and DNS names, is completely inaccessible to unverified devices. SDP is related to IAM systems, firewalls, IDS/IPS systems, and cloud security gateways. The concept of SDP began to evolve in the late 2000s. The Defense Information Systems Agency (DISA) and Cloud Security Alliance (CSA) made key contributions to the development of SDP.

- ZTNA (Zero Trust Network Access) is one of the core technologies within the ZTA framework. Unlike traditional security models, ZTNA makes no assumptions about trust and requires strict verification of origin and context for every resource access request, regardless of where the request comes from. ZTNA solutions are related to SDP and SASE as well as IAM solutions. Unlike SDP solutions, which cover not only application access but also network infrastructure management, ZTNA solutions focus on user access management. ZTNA is often seen as a more secure, flexible and modern alternative to traditional VPN solutions.

- SASE (Secure Access Service Edge) is a network architecture that integrates comprehensive network security and wide-area networking (WAN) functions into a single cloud-based platform. SASE was designed to provide secure, efficient access to network resources in the face of an increasing number of remote users and distributed applications. It is a groundbreaking model proposed by Gartner in 2019 that redefines traditional approaches to network security and access. SASE integrates features such as SD-WAN (software-defined wide area network), secure web gateway (SWG), cloud access security broker (CASB), intrusion detection and prevention (IDS/IPS), and more.

- ZTE (Zero Trust Edge) is a concept that combines the principles of ZTA with networking technologies at the edge of the network (edge computing). This approach aims to provide strong security for network resources and applications hosted at the edge of the network, especially with the increasing number of remote users and the growing use of cloud and mobile technologies. ZTE solutions are closely aligned with ZTNA, SDP and SASE. The ZTE model proposed by Forrester in 2021 is similar to SASE, but with an additional focus on implementing zero-trust principles for user authentication and authorization.
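
The contrast drawn above between NAC, which decides once whether a device may join the network, and SDP/ZTNA, which evaluate each access request to each resource, can be sketched in a few lines of Python. This is a deliberately simplified model: the `Device` attributes, the `RESOURCE_POLICY` table, and both functions are illustrative assumptions, not part of any standard or product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    owner_role: str
    patched: bool
    antimalware_ok: bool


def nac_admit(device: Device) -> bool:
    """NAC-style check: one posture decision when the device joins the network."""
    return device.patched and device.antimalware_ok


# Hypothetical per-resource policy: which roles may reach which resource.
RESOURCE_POLICY = {
    "payroll-db": {"finance"},
    "wiki": {"finance", "engineering"},
}


def ztna_allow(device: Device, resource: str) -> bool:
    """ZTNA/SDP-style check: posture and role are evaluated for every request."""
    posture_ok = device.patched and device.antimalware_ok
    allowed_roles = RESOURCE_POLICY.get(resource, set())  # unknown resource: deny
    return posture_ok and device.owner_role in allowed_roles


laptop = Device(owner_role="engineering", patched=True, antimalware_ok=True)

# In this simplified model, NAC admits the laptop to the network as a whole.
print(nac_admit(laptop))                 # True
# ZTNA decides each resource request separately.
print(ztna_allow(laptop, "wiki"))        # True
print(ztna_allow(laptop, "payroll-db"))  # False
```

Resources that are not listed in the policy are denied by default, which mirrors the zero-trust principle that nothing is reachable until it is explicitly verified and allowed.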

Network Security

This section gives an overview of solutions designed to protect the network infrastructure. These are mainly various proxy solutions in the form of filters, gateways, etc. This group does not include the secure network access solutions and some other solutions described in other groups above, or the mobile, cloud and other solutions described below.

Each tool in this group plays a different role in creating a layered defense strategy. Let’s look at how these various solutions can be integrated to form a flexible, effective network defense, and review their key features, benefits and potential applications. We will also describe the subgroups and evolution of network security technologies.

- FW (Firewall) is a basic network device or software solution designed to control and filter incoming and outgoing network traffic based on predetermined security rules. The main purpose of a firewall is to protect computers and internal networks from unauthorized access and various types of network attacks, ensuring the security of data and resources. The main types of firewalls are packet filters, Stateful Inspection Firewalls (SIF), Application Layer Firewalls (ALF), and Next-Generation Firewalls (NGFW). Firewalls are often used in conjunction with other security systems, including IDS/IPS, SIEM, and VPN systems. The first work on firewall technology was published in 1987 by engineers at Digital Equipment Corporation (DEC). AT&T Bell Labs also contributed to the development of the first firewalls.

- DNS Firewall is a solution for monitoring and controlling DNS queries to prevent access to malicious or suspicious sites. DNS firewalls differ from regular firewalls in that they focus solely on DNS traffic. DNS firewalls can be implemented as hardware appliances, software, or cloud services. DNS firewalls are often integrated with IDS systems, anti-malware, and other types of firewalls. DNS firewalls were introduced in the late 1990s.

- SIF (Stateful Inspection Firewall, Stateful Firewall) is a type of firewall that not only filters traffic based on source and destination IP addresses, ports, and protocols, but also monitors and accounts for the states of active network connections (sessions). This allows it to dynamically manage traffic based on session context, offering more effective protection than simple packet filters. SIFs operate at the network, session and application layers of the OSI model. SIFs are often used in conjunction with IDS/IPS, IAM, and VPN systems. SIF was invented in the early 1990s by Check Point Software Technologies. SIFs have become the basis for many modern cybersecurity solutions, including NGFW.

- ALF (Application-Level Firewall or Application Firewall) is a type of firewall that monitors and, if necessary, blocks connections and I/O to applications and system services based on a customized policy (set of rules). An ALF can control communication up to the application layer of the OSI model, for example to protect web applications, e-mail, FTP, and other protocols. The two main categories of application layer firewalls are network-based and host-based firewalls. ALF is often integrated with IDS/IPS and IAM systems. ALF development began in the 1990s through the efforts of Purdue University, AT&T, and DEC.

- WAF (Web Application Firewall) is an application layer firewall (ALF) designed to protect web applications and APIs (application programming interfaces) from various types of attacks such as XSS (cross-site scripting), SQL injection, CSRF (cross-site request forgery), etc. The varieties of WAFs are hardware, cloud and virtual. WAFs sit in front of web applications in the path of requests to them and are often integrated with IDS/IPS, CMS and SIEM systems. The first WAFs were developed by Perfecto Technologies, Kavado and Gilian Technologies in the late 1990s. In 2002, the ModSecurity open source project was created, which made WAF technology more accessible. WAF solutions are being actively developed.

- WAAP (Web Application and API Protection) is a class of solutions designed to secure web applications and APIs. It includes the features of a traditional WAF and extends them with additional protective measures. Unlike traditional WAFs, WAAP provides more comprehensive protection, including API protection, cloud services, and advanced threat intelligence. WAAP can be implemented as cloud services, hardware solutions, or integrated platforms. WAAP is often integrated with IDS/IPS and SIEM systems. The WAAP concept was proposed by Gartner in 2019.

- SWG (Secure Web Gateway) is a solution designed to monitor and manage inbound and outbound web traffic to prevent malicious activity and enforce corporate policies. SWGs are typically implemented as proxy servers and are placed between users and Internet access. The functions of SWGs include web content filtering and caching, malware protection, application and user access control. SWGs can be deployed as hardware appliances, software solutions, or as cloud services. SWGs are often integrated with IDS/IPS systems, anti-malware solutions, firewalls and DLP. SWG solutions began to appear in the 1990s.

- NGFW (Next-Generation Firewall) is a class of firewall that not only performs standard traffic filtering functions, but also includes additional capabilities to provide deeper and more comprehensive network security. NGFWs are implemented as a device or software. NGFW technologies include packet filtering, SIF, DPI, IDS/IPS, ALF, anti-malware, URL and content filtering, etc. NGFW solutions are tightly integrated with SIEM and IAM systems, cloud services, and mobile devices. In 2007, Palo Alto Networks introduced what was in effect the first NGFW, although the term was coined by Gartner later, in 2009. The creation of the NGFW fundamentally changed previous approaches to filtering network traffic.

- UTM (Unified Threat Management) is a comprehensive solution that integrates multiple security functions into a single device or software package. The main functions of UTM are firewall, IDS and IPS. Additional UTM functions are gateway anti-malware, ALF, DPI, SWG, traffic filtering, spam and content filtering, DLP, SIEM, VPN, network access control, tarpit, protection against DoS/DDoS and zero-day attacks, etc. Depending on the specific implementations of NGFWs and UTMs, in practice these solutions either do not differ in functionality or differ in customization capabilities: UTMs offer a wider range of versatile built-in features than NGFWs, whereas NGFWs often offer functions that are more flexible to customize. UTM solutions began to develop in the early 2000s, thanks to Fortinet and a few others. Then, in 2004, IDC coined the term UTM.
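
The difference between a simple packet filter and the stateful inspection described in the SIF entry above can be illustrated with a toy connection table. The sketch below is a minimal Python model under strong assumptions: it tracks only address and port tuples, ignores TCP flags, timeouts, and protocols, and the `Packet` and `StatefulFirewall` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src: str
    sport: int
    dst: str
    dport: int
    inbound: bool  # True if the packet arrives from the outside network


class StatefulFirewall:
    """Toy model: outbound traffic is allowed, inbound only as a reply."""

    def __init__(self):
        self.sessions = set()  # connection tuples seen leaving the network

    def allow(self, pkt: Packet) -> bool:
        if not pkt.inbound:
            # Remember the outbound connection so replies can be matched.
            self.sessions.add((pkt.src, pkt.sport, pkt.dst, pkt.dport))
            return True
        # An inbound packet is a reply if it mirrors a recorded session.
        return (pkt.dst, pkt.dport, pkt.src, pkt.sport) in self.sessions


fw = StatefulFirewall()
request = Packet("10.0.0.5", 50000, "203.0.113.7", 443, inbound=False)
reply = Packet("203.0.113.7", 443, "10.0.0.5", 50000, inbound=True)
unsolicited = Packet("198.51.100.9", 443, "10.0.0.5", 50000, inbound=True)

print(fw.allow(request))      # True
print(fw.allow(reply))        # True
print(fw.allow(unsolicited))  # False
```

A stateless packet filter would need a static rule that admits all inbound traffic from port 443 in order to let the reply through, which would also admit the unsolicited packet. Tracking session state lets the firewall tell the two apart.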